Hi! I am with Apple AIML. Previously, I was a scientist at A*STAR's Centre for Frontier AI Research (CFAR). I completed my Ph.D. at the National University of Singapore (NUS) in 2023, where I was fortunate to be supervised by Jiashi Feng and Xinchao Wang. My research focuses on transfer learning and representation learning for computer vision. Before my Ph.D., I graduated with distinction in electronic engineering from Nanjing University.

My research interests lie in generalizable and trustworthy deep learning with minimal human annotations. Specifically, I'm interested in transfer learning and representation learning, covering but not limited to the following topics:

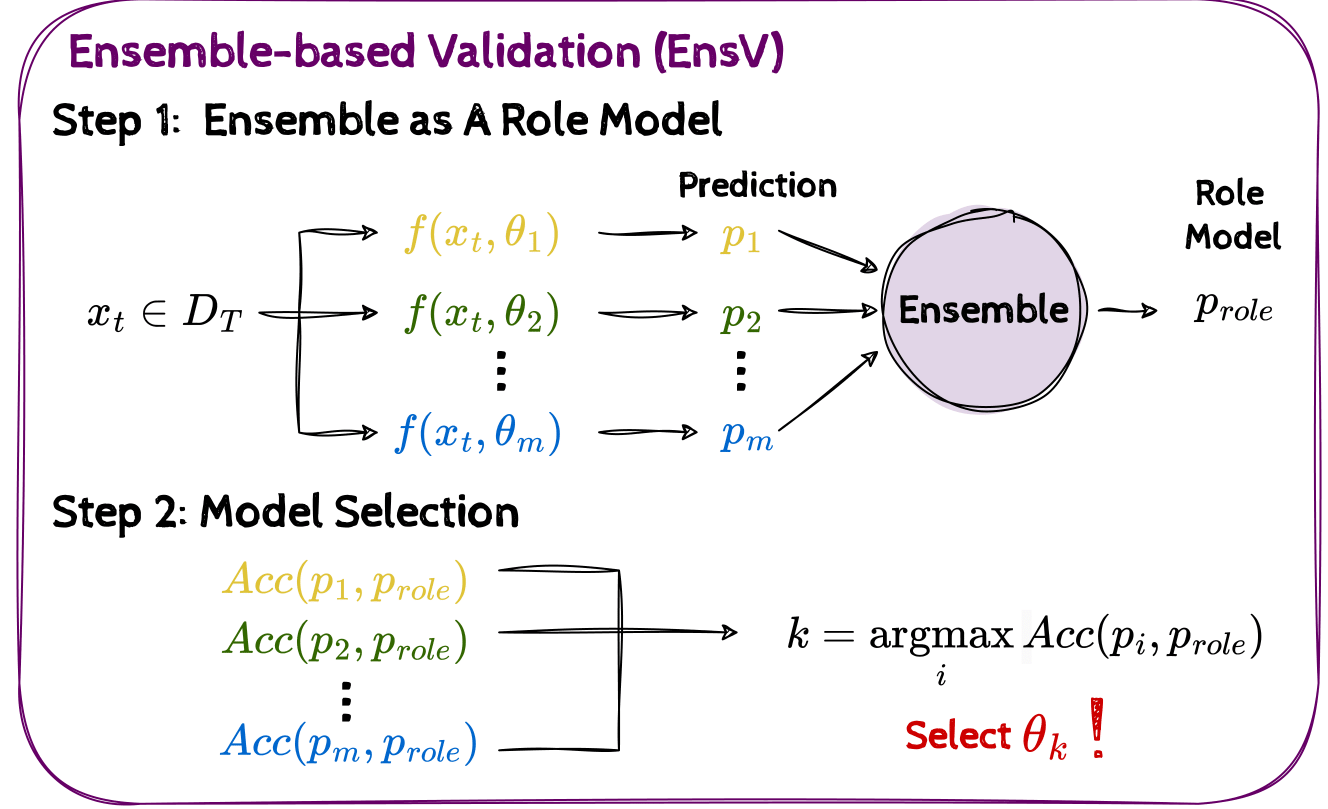

Dapeng Hu, Mi Luo, Jian Liang†, Chuan-Sheng Foo. Advances in Neural Information Processing Systems (NeurIPS) Datasets and Benchmarks Track, 2024. A four-page short version appeared in the NeurIPS Workshop on Distribution Shifts, 2023. arXiv / code. We proposed EnsV, a simple, versatile, and reliable approach for practical model selection in unsupervised domain adaptation.

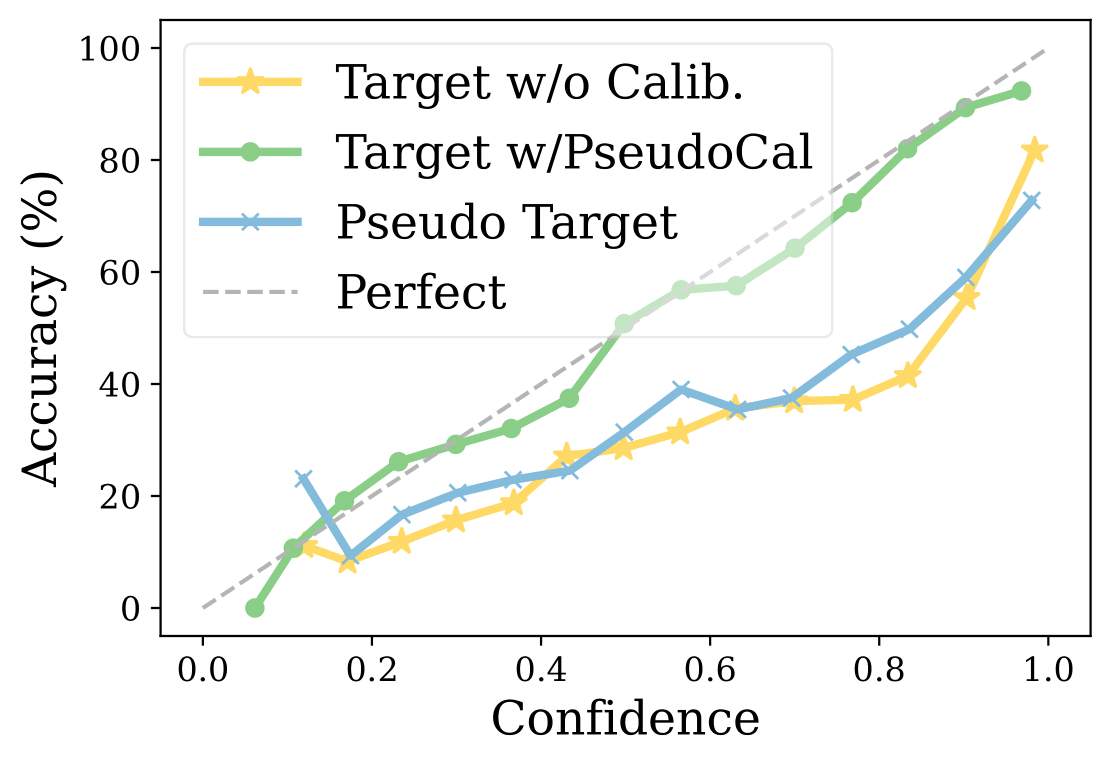

Dapeng Hu, Jian Liang, Xinchao Wang, Chuan-Sheng Foo†. International Conference on Machine Learning (ICML), 2024. A four-page short version appeared in the NeurIPS Workshop on Distribution Shifts, 2023. arXiv / code. We proposed PseudoCal, a novel and versatile post-hoc framework for calibrating predictive uncertainty in unsupervised domain adaptation.

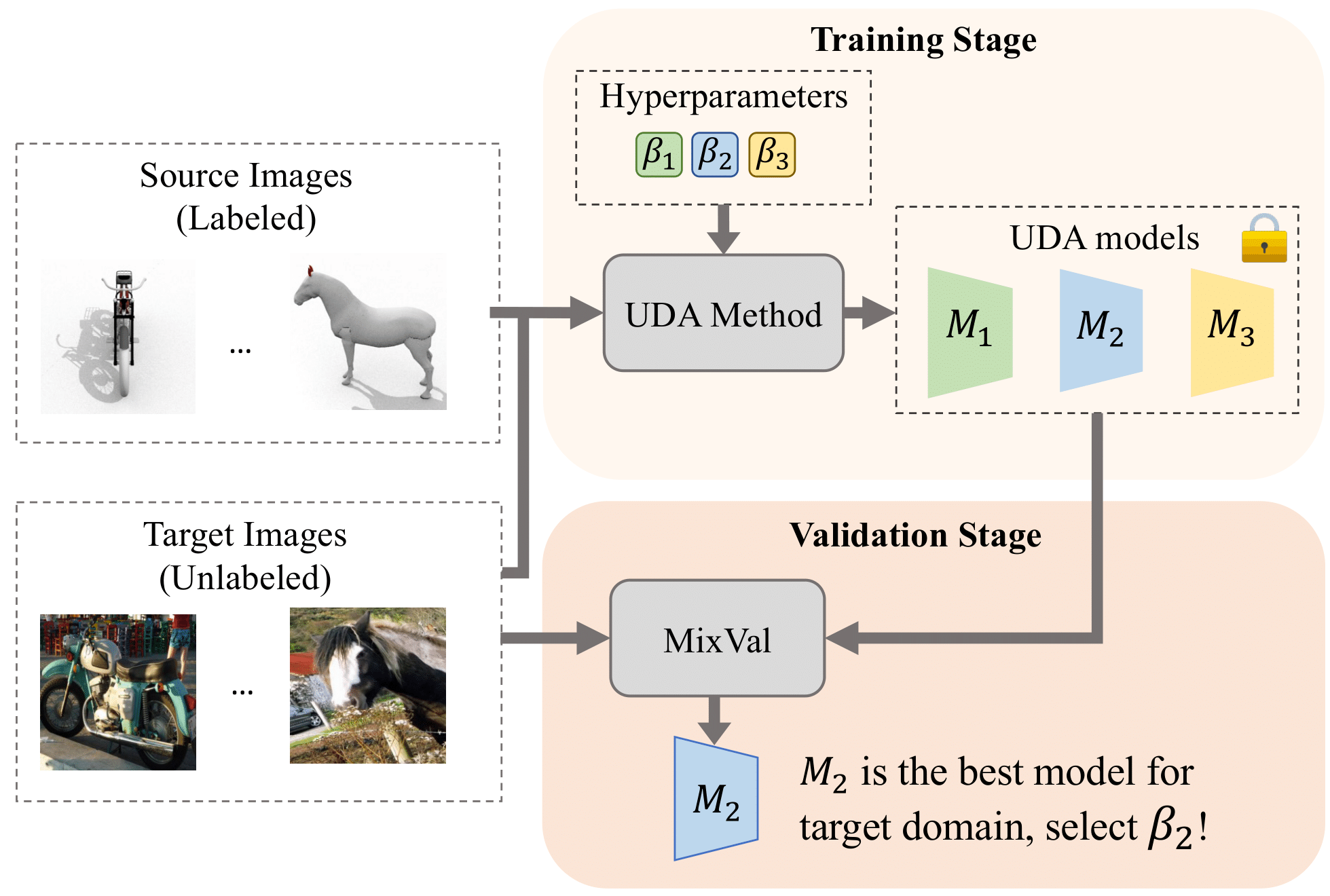

Dapeng Hu, Jian Liang†, Jun Hao Liew, Chuhui Xue, Song Bai, Xinchao Wang†. Advances in Neural Information Processing Systems (NeurIPS), 2023. arXiv / code. We proposed MixVal, a novel target-only validation method for unsupervised domain adaptation with state-of-the-art model selection performance and improved stability.

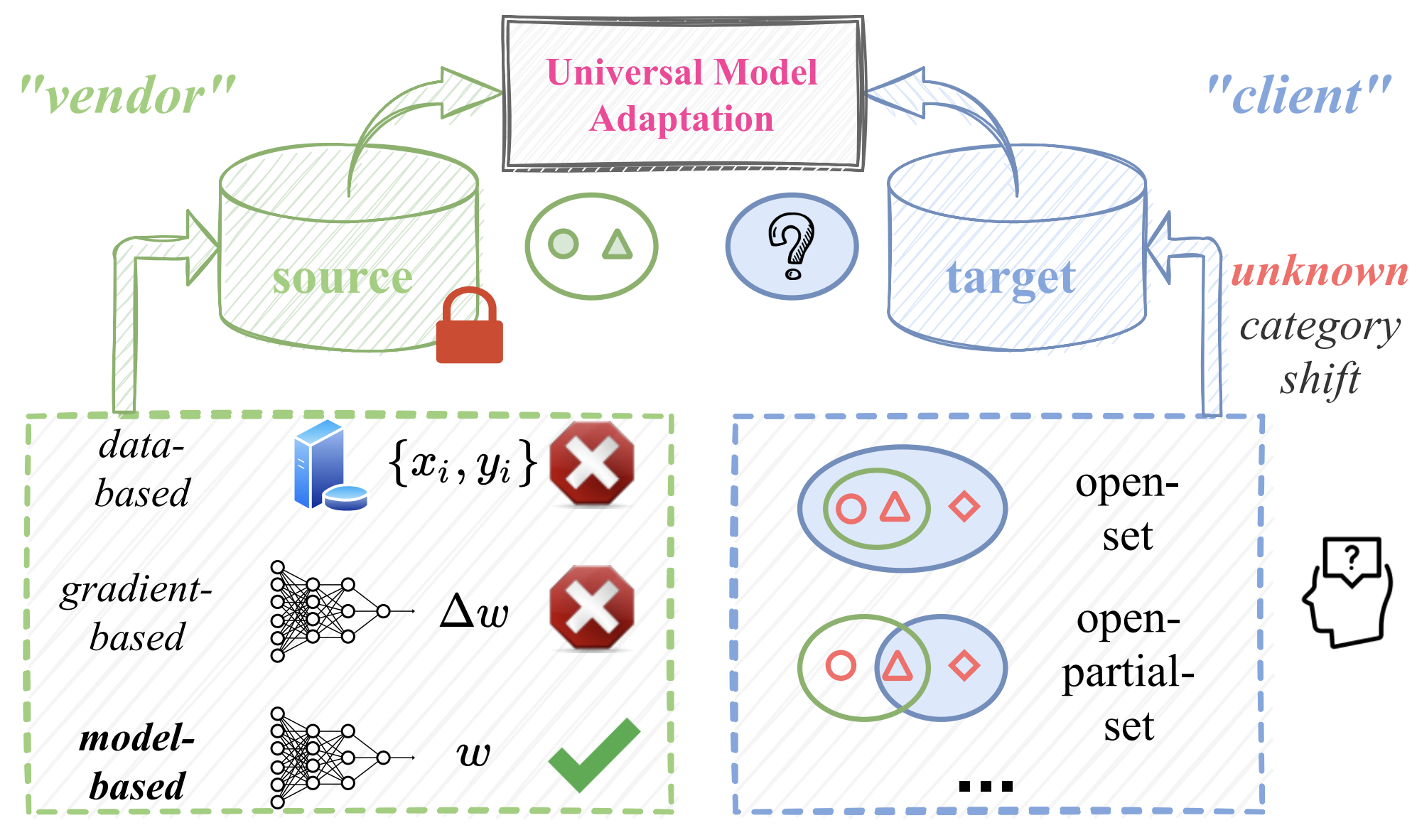

Jian Liang*, Dapeng Hu*, Jiashi Feng, Ran He. Technical report. arXiv. We proposed UMAD, a novel and effective method for realistic open-set domain adaptation tasks where neither the source data nor prior knowledge of the label-set overlap across domains is available during target domain adaptation.

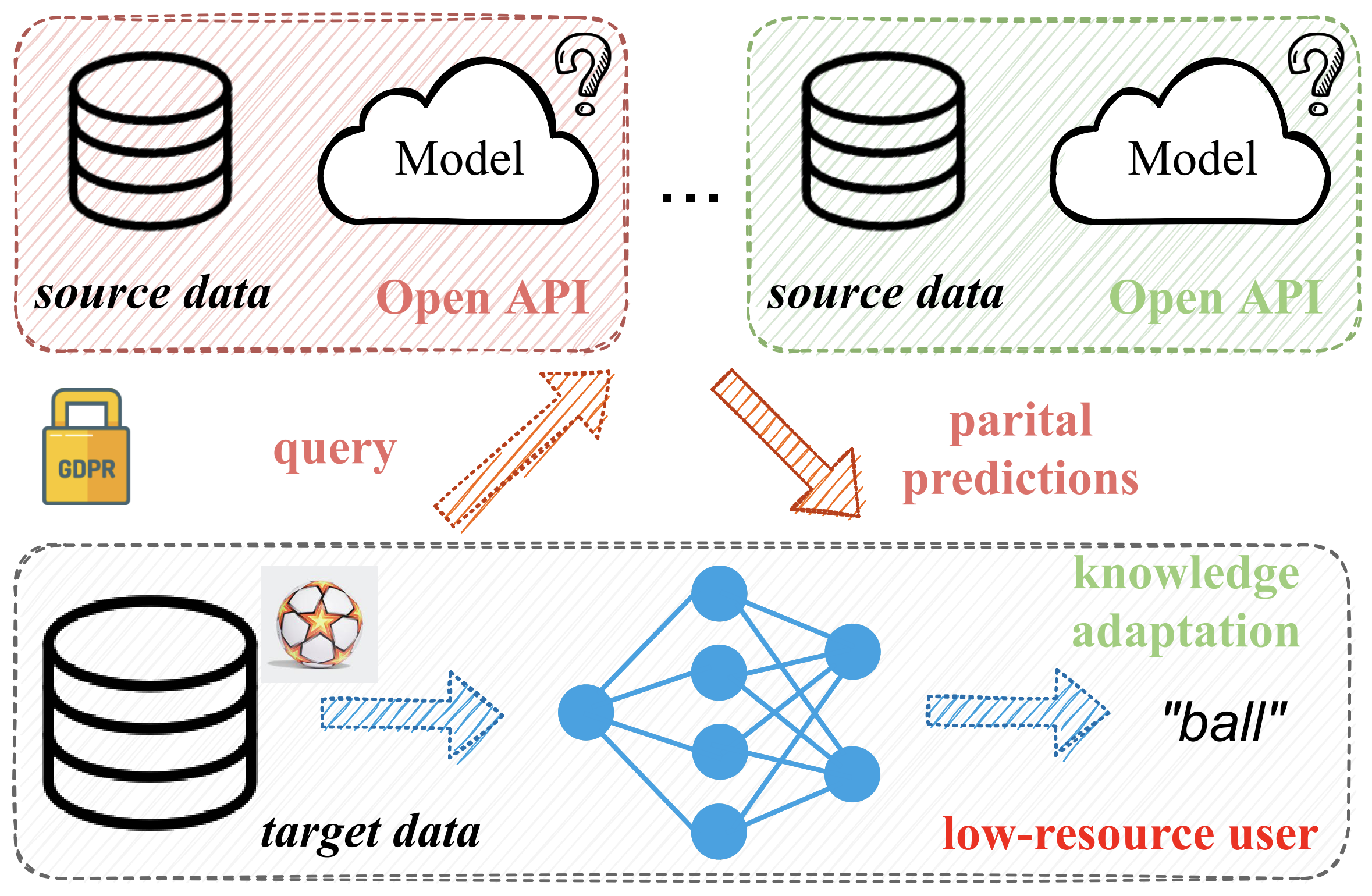

Jian Liang, Dapeng Hu, Jiashi Feng, Ran He. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022. Oral. arXiv / code. We studied a realistic and challenging domain adaptation problem and proposed DINE, a safe and efficient adaptation framework that requires only black-box predictors from the source domains.

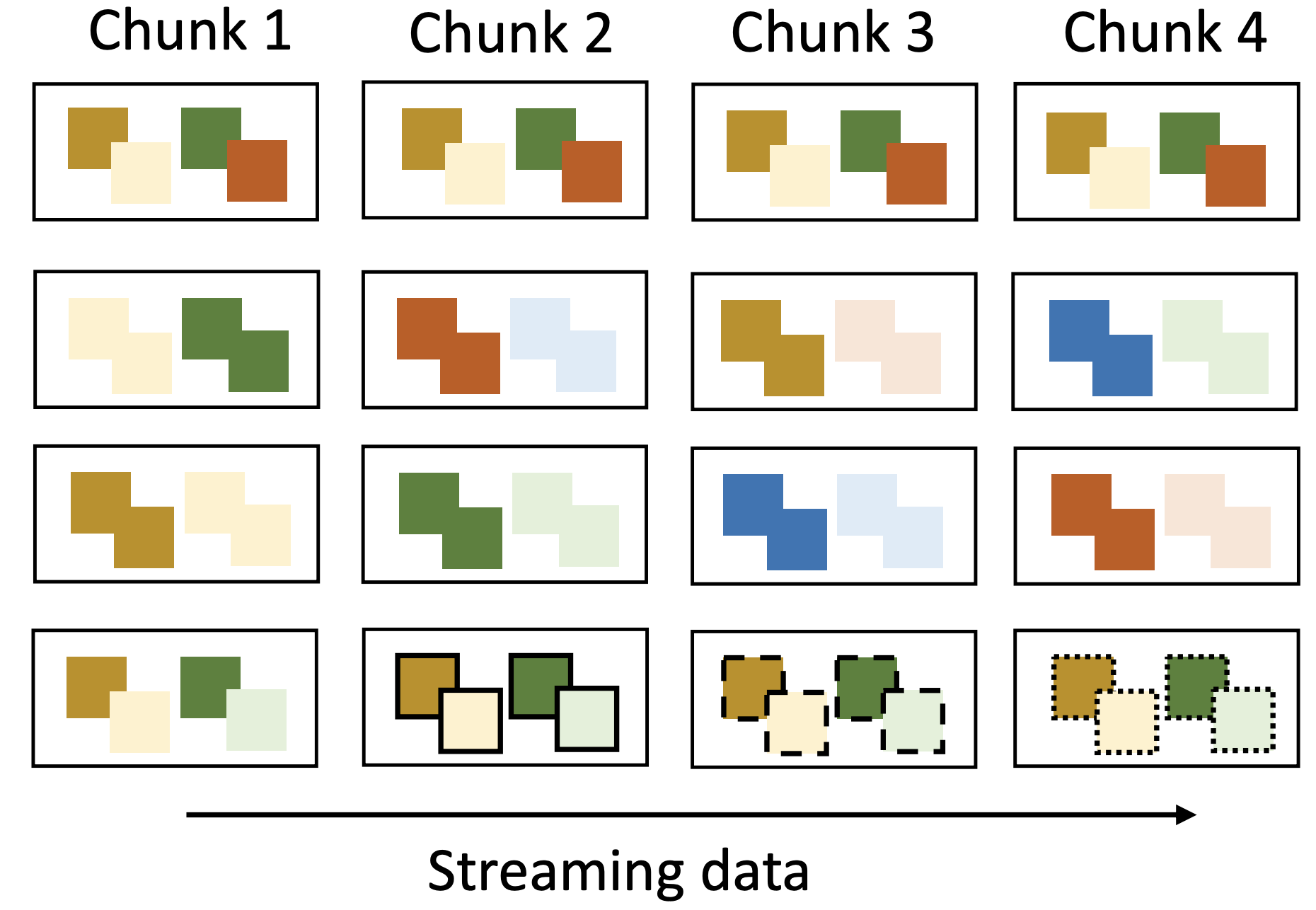

Dapeng Hu*, Shipeng Yan*, Qizhengqiu Lu, Lanqing Hong†, Hailin Hu, Yifan Zhang, Zhenguo Li, Xinchao Wang†, Jiashi Feng. International Conference on Learning Representations (ICLR), 2022. arXiv. We conducted the first thorough empirical evaluation of how well self-supervised learning (SSL) performs with various streaming data types and diverse downstream tasks.

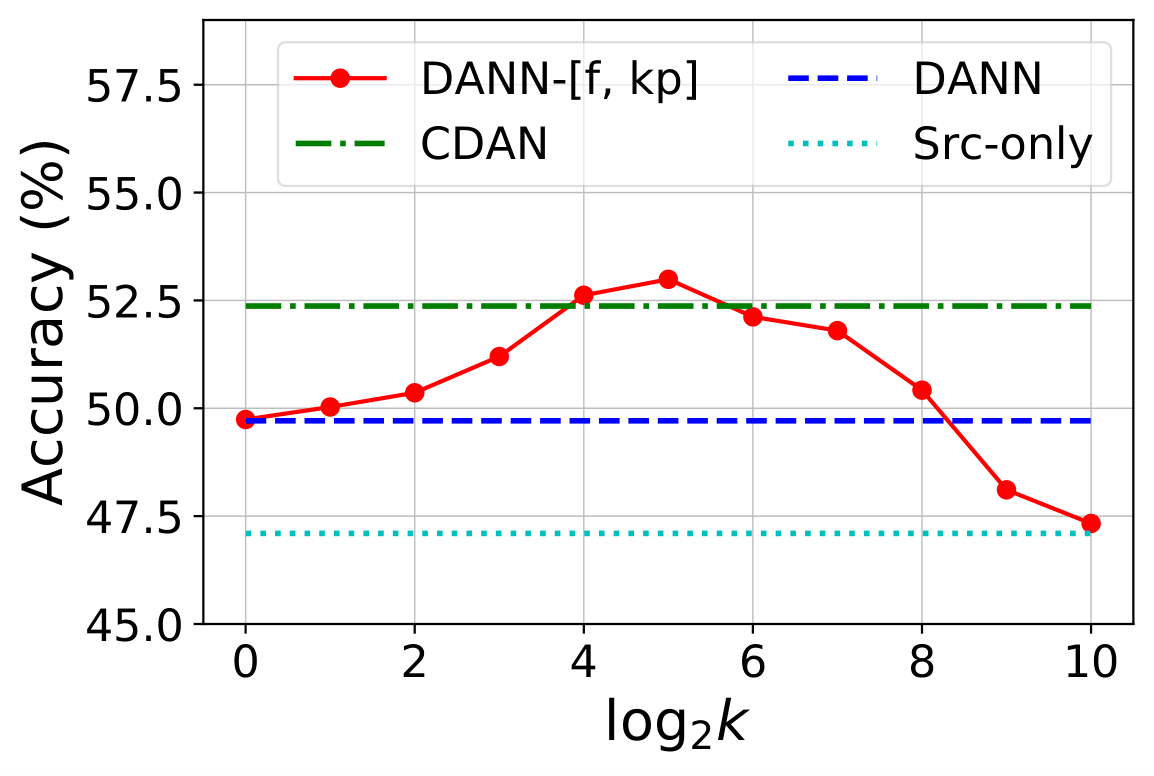

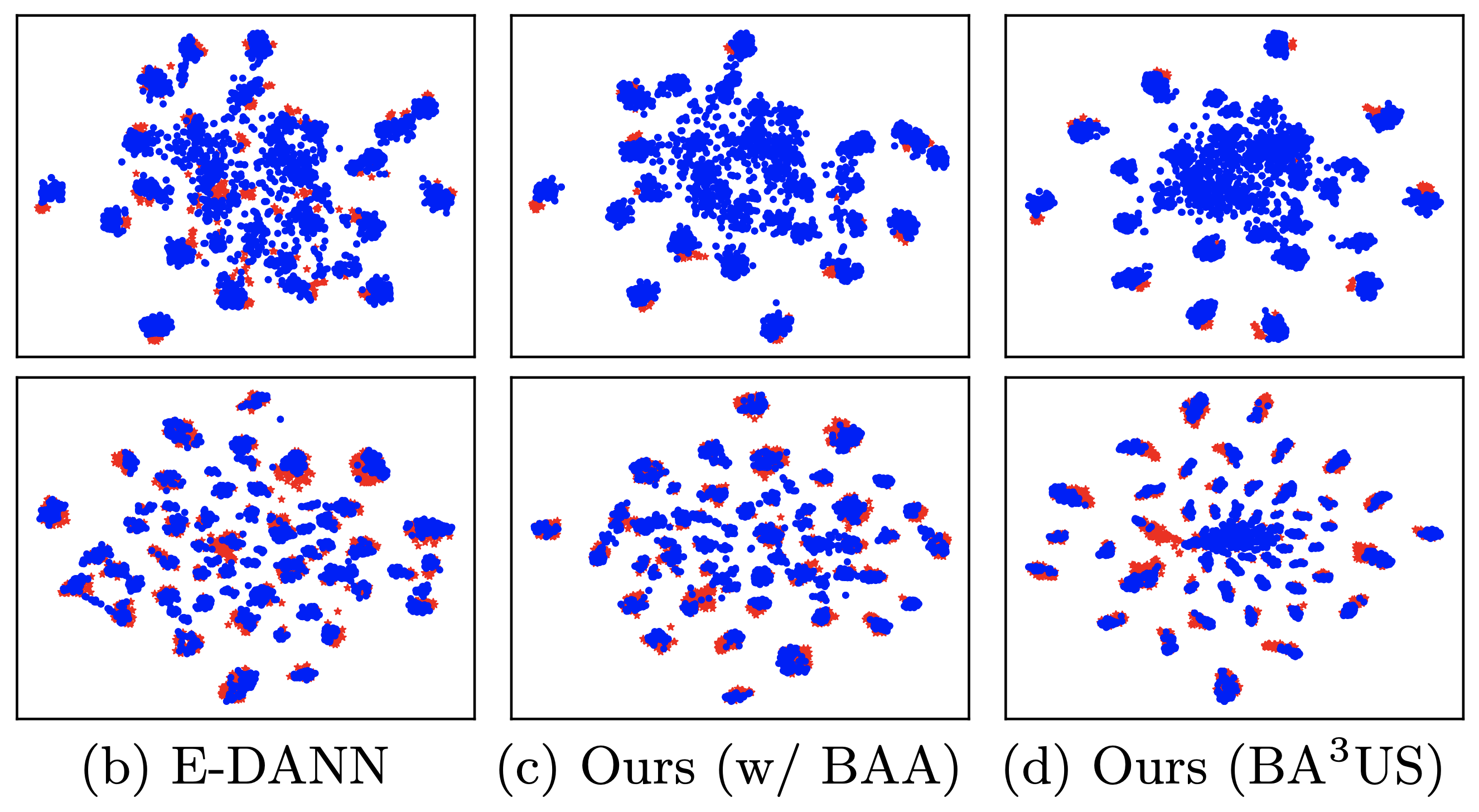

Dapeng Hu, Jian Liang†, Hanshu Yan, Qibin Hou, Yunpeng Chen. IEEE Transactions on Image Processing (TIP), Volume 30, 2021. arXiv / code. We proposed NOUN, a novel, efficient, and generic conditional domain adversarial training method for domain adaptation in both image classification and segmentation.

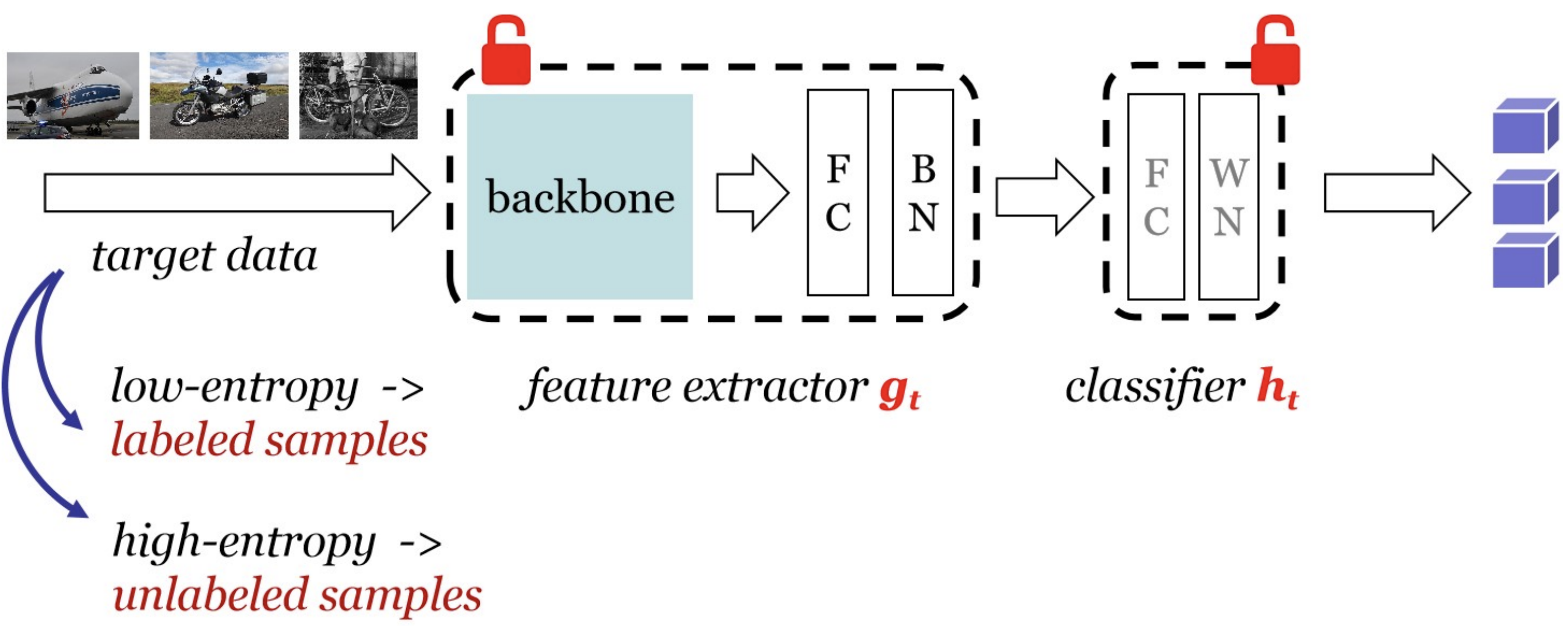

Jian Liang, Dapeng Hu, Yunbo Wang, Ran He, Jiashi Feng. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2021. arXiv / code. We enhanced SHOT to SHOT++ with an intra-domain labeling transfer strategy and achieved source data-free adaptation results that surpass those of state-of-the-art data-dependent methods.

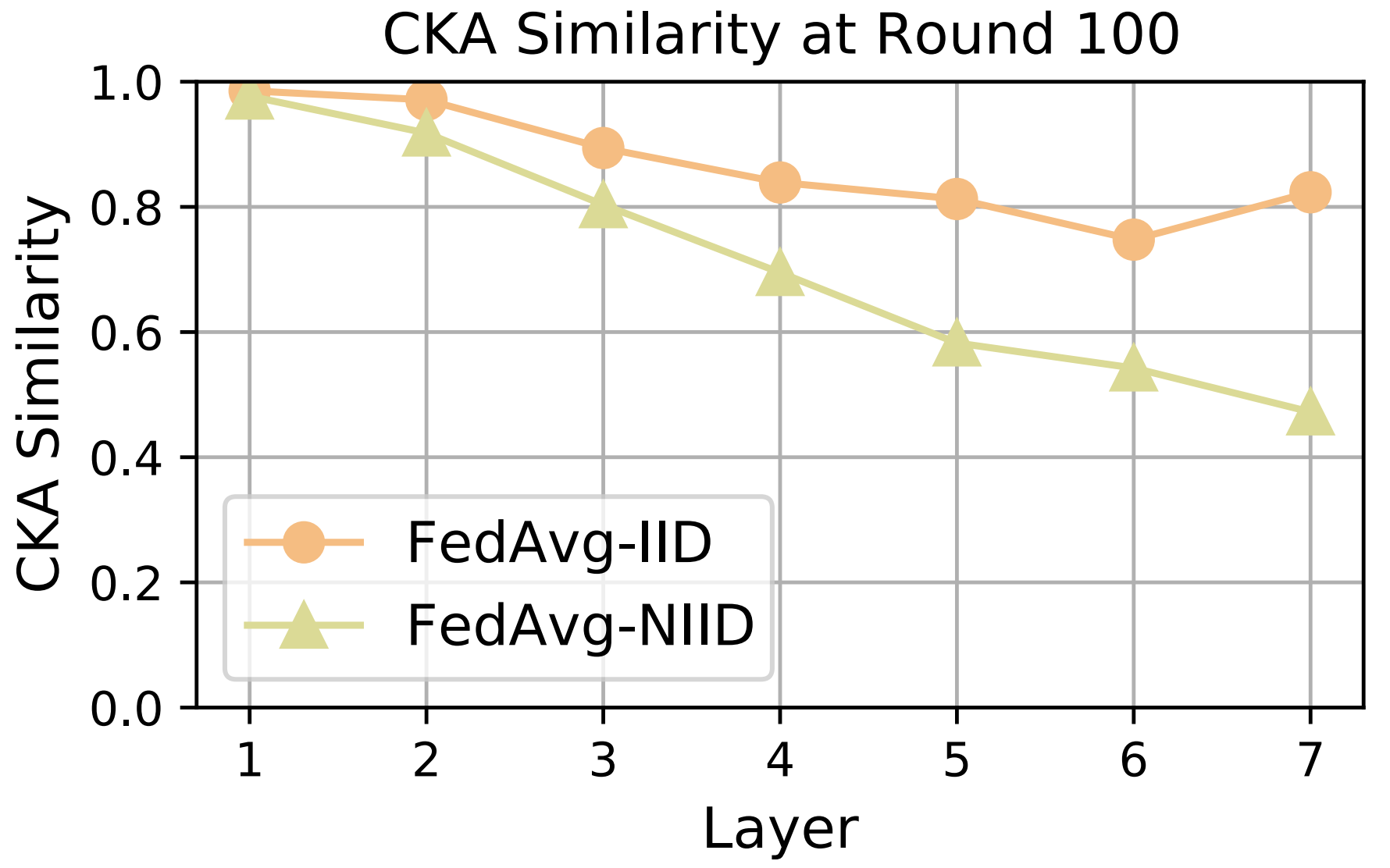

Mi Luo, Fei Chen, Dapeng Hu, Yifan Zhang, Jian Liang, Jiashi Feng. Advances in Neural Information Processing Systems (NeurIPS), 2021. arXiv. We proposed CCVR, a simple and universal classifier calibration algorithm for federated learning.

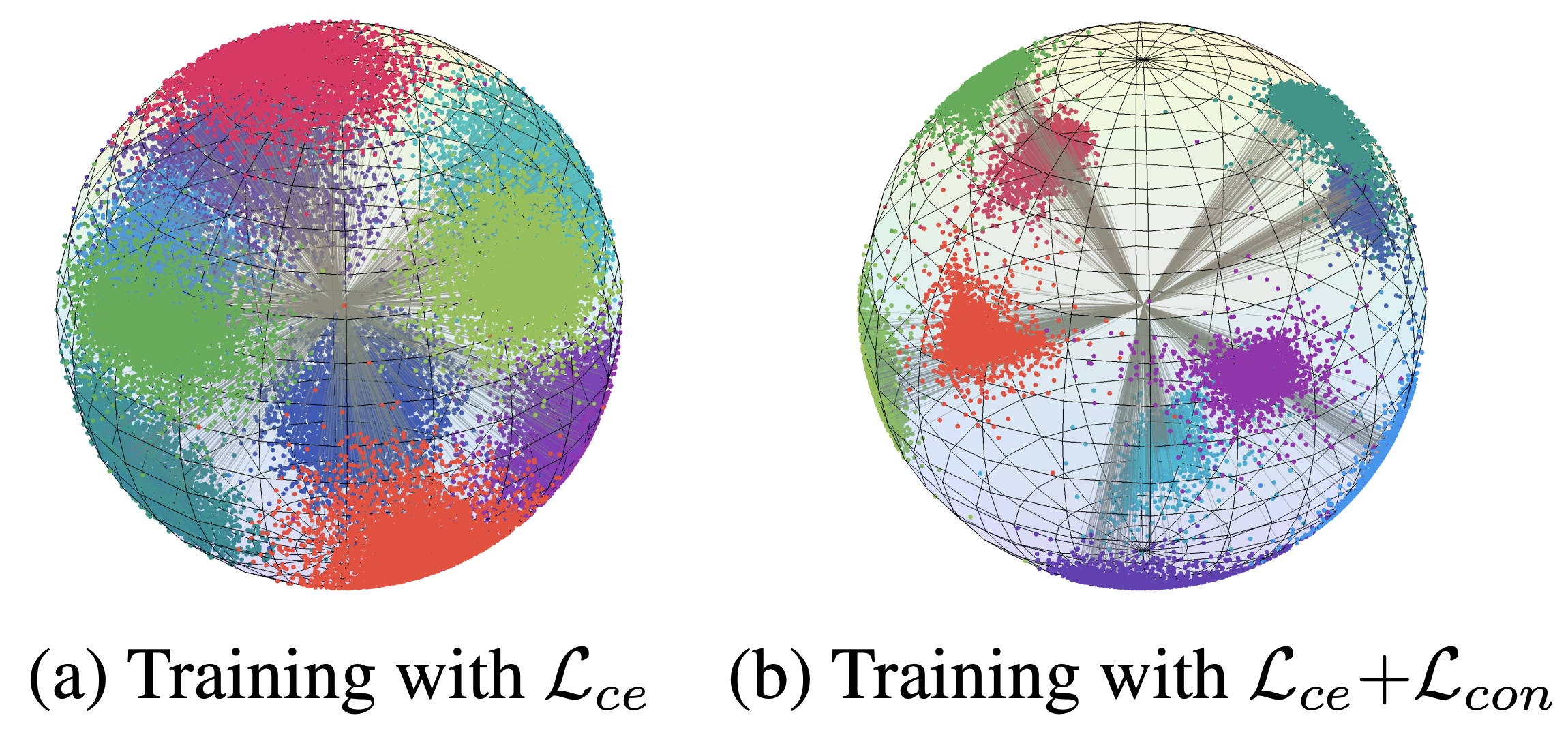

Yifan Zhang, Bryan Hooi, Dapeng Hu, Jian Liang, Jiashi Feng. Advances in Neural Information Processing Systems (NeurIPS), 2021. arXiv / code. We proposed Core-tuning, a theoretically grounded and empirically promising method for fine-tuning contrastive self-supervised models.

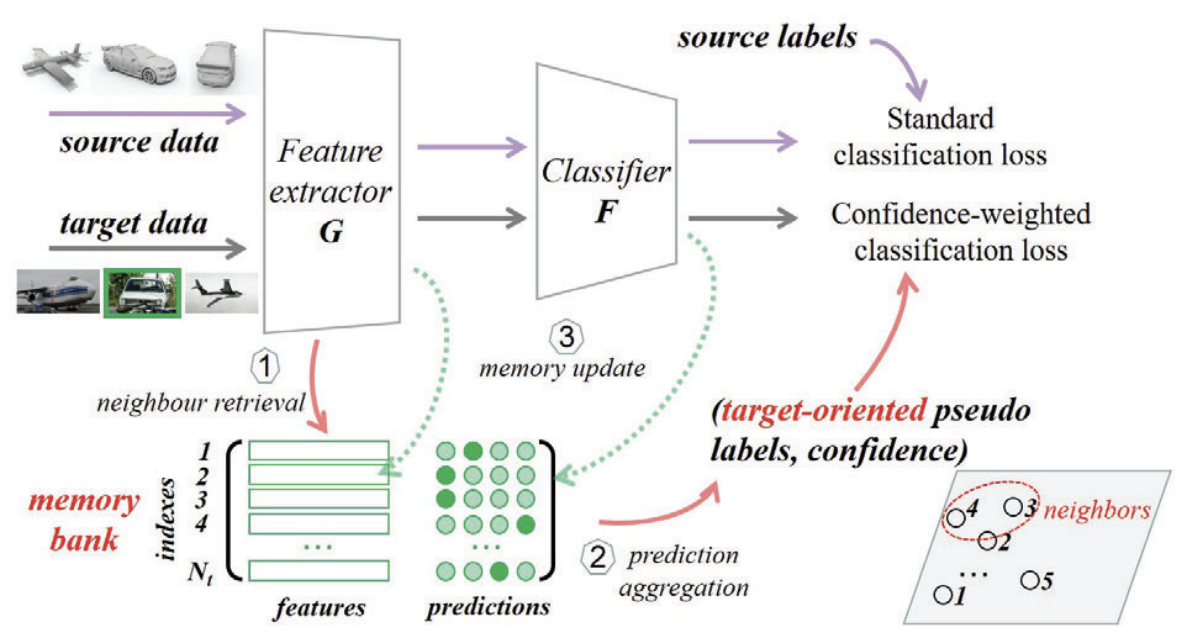

Jian Liang, Dapeng Hu, Jiashi Feng. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021. arXiv / code. We proposed ATDOC, a simple yet effective framework to combat classifier bias, offering a novel perspective on addressing domain shift.

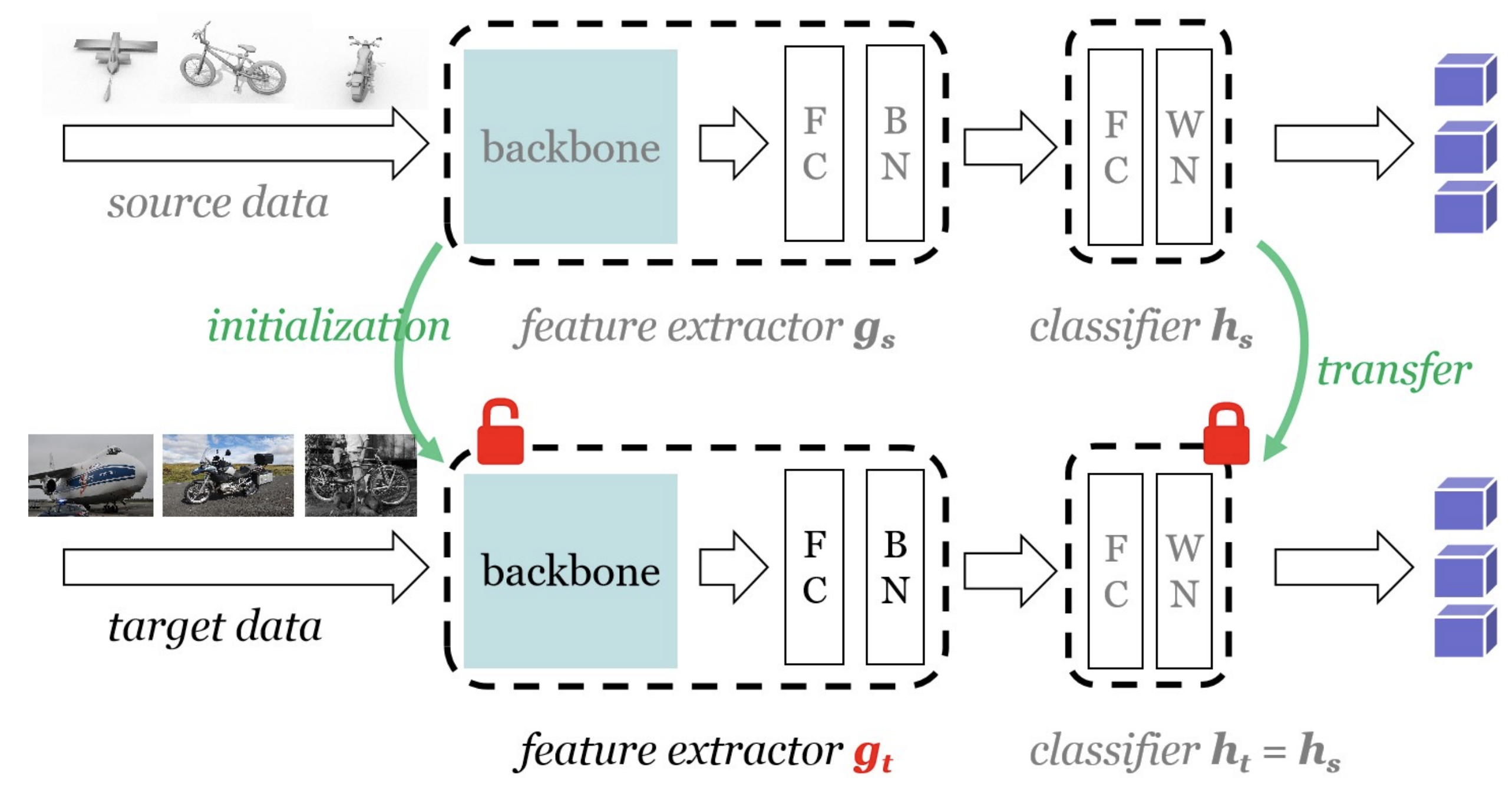

Jian Liang, Dapeng Hu, Jiashi Feng. International Conference on Machine Learning (ICML), 2020. arXiv / code. We were among the first to study a practical unsupervised domain adaptation setting called "source-free" DA, and we proposed a simple yet generic representation learning framework named SHOT.

Jian Liang, Yunbo Wang, Dapeng Hu, Ran He, Jiashi Feng. European Conference on Computer Vision (ECCV), 2020. arXiv / code. We tackled partial domain adaptation by augmenting the target domain and transforming the task into an unsupervised domain adaptation problem.

Journal Reviewer: TPAMI, IJCV, TIP, TMLR, TKDE

Conference Reviewer: ICML 2021-2023, NeurIPS 2021-2024, ICLR 2022-2025, CVPR 2022-2025, ICCV 2023, ECCV 2024, AISTATS 2025, AAAI 2025

Head TA, EE6934: Deep Learning (Advanced), 2020 Spring

Head TA, EE5934: Deep Learning, 2020 Spring

TA, EE4704: Image Processing and Analysis, 2019 Fall

TA, EE2028: Microcontroller Programming and Interfacing, 2019 Fall
